AI Overview: Neural Networks, Machine Learning, Deep Learning, Use Cases and Trends

You have probably been sent through a wormhole from a distant past if you still haven’t heard of how artificial intelligence and its numerous applications are already changing people’s lives. Leading tech giants like Google and Apple use it for their voice assistants (Google Assistant and Siri) and image recognition software. Netflix and Amazon use modern AI technologies to compile our watchlists and lists of recommended products, target ads and make our user experience more enjoyable and convenient. MIT and other research centers and companies use AI to organize and analyze huge amounts of data and make predictions.

And this is just the tip of the iceberg. Artificial intelligence and its major implementations, machine learning and deep learning, are being used in many other industries, including agriculture, business operations, consumer products, education, healthcare and transportation.

Definitions: What is AI?

Artificial intelligence, neural networks, machine learning and deep learning: these terms have been mistakenly used interchangeably by some people for quite a while now. All of them have to do with machines performing operations that were formerly performed, and normally expected to be performed, by humans. But computing mechanisms have evolved so much that they are now capable of outperforming people in most tasks that involve serious computations.

Artificial intelligence was introduced first and is the umbrella term, while machine learning and deep learning came quite a while later. The first talks of artificial intelligence date back to the years after the Second World War. As soon as the first machines appeared that were capable of performing millions of operations per second, predictions and animated talk of a forthcoming AI equivalent to the human mind were booming. But even decades later, after huge leaps forward in software and hardware development, we have barely scratched the surface of AI’s full potential.

Over the years we have seen many approaches to implementing AI. Some were based on pure logic and programmatically defined mechanisms, while others rested upon the idea that artificial intelligence systems must mimic the human brain in order to become “truly intelligent”.

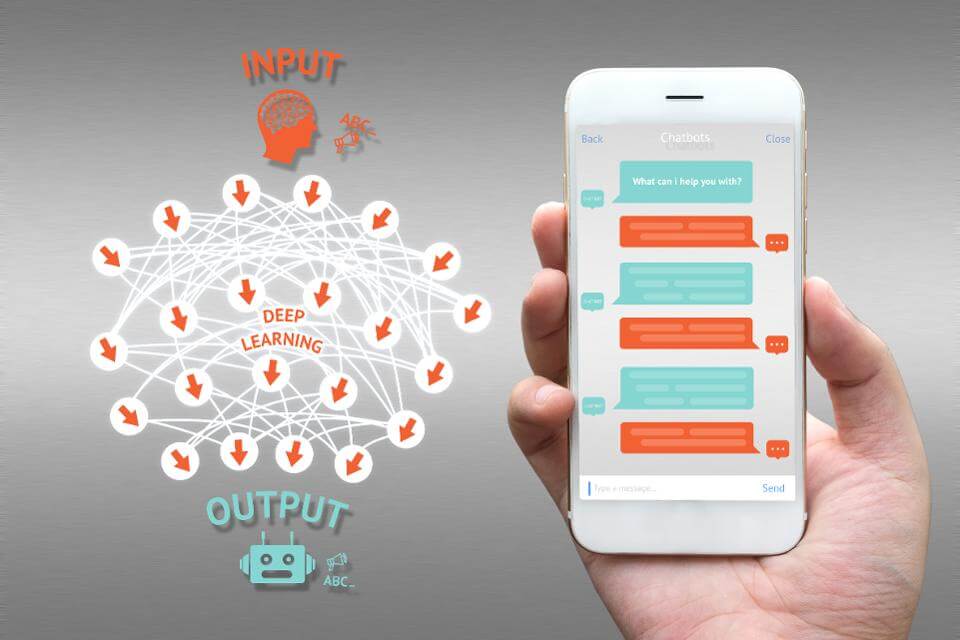

Machine learning and deep learning are implementations of AI, and deep learning in particular is built on the concept of neural networks. Neural networks are computing systems inspired by the biological neural networks of our brain; each one is a collection of connected units, or nodes. These nodes, much like the actual neurons of our brain, exchange signals with one another, and learning algorithms adjust the connections between them based on the data received.

Machine learning is an implementation of AI in which algorithms learn from data rather than follow explicitly programmed rules. Deep learning is a subset of machine learning in which neural networks consist of multiple layers and are supplied with large data sets that are often unstructured and contain data of various types. Machine learning and deep learning are currently in the limelight of the AI domain with numerous applications, including the ones we mentioned above.
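To make the idea of layered, connected nodes more concrete, here is a minimal sketch of how a signal passes through a tiny neural network. The layer sizes, the weights in `LAYERS` and the function names are all hypothetical, picked by hand for illustration; a real network would learn its weights from data, and that training step is not shown here.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One layer: every node sums its weighted inputs, adds a bias,
    and passes the result through the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the signal through each layer in turn."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


# Hypothetical hand-picked weights (assumption, not learned from data):
# 2 inputs -> 3 hidden nodes -> 1 output node.
LAYERS = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.2, 0.4]], [0.05]),
]

output = network_forward([1.0, 0.5], LAYERS)
print(output)  # a single value between 0 and 1
```

Stacking more entries in `LAYERS` is what makes a network "deep"; the forward pass itself stays the same.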

AI Functionalities

There are many tasks and functions that artificial intelligence systems can be used for, and a lot of AI systems are specifically designed to solve a particular task or implement a certain functionality, e.g. face recognition in a smartphone app.

The fundamental functionalities of AI include the following:

- Learning and adapting to the changing environment;

- Analyzing and displaying vital information for domain-specific tasks (Healthcare, Business Analytics, Law);

- Analytic, deductive, inductive, probabilistic and other types of reasoning;

- Interaction with other machines and people to collaboratively solve problems.

Types of AI

Generally, artificial intelligence is classified as follows:

- Narrow AI (or weak AI) is AI that is specifically designed to perform certain tasks, such as chatting, playing chess or Go, or providing information of most interest to a person.

- Artificial General Intelligence (or Strong AI) is AI that can perceive, understand, analyze and reason in its environment without any constraints, just like a human being would. We are likely still decades away from implementing this kind of intelligent system.

Another classification can be based on how AI is applied:

- Monitoring - analyzing large amounts of data, detecting patterns and generating useful output for display.

- Prediction - AI can be used for forecasting and creating models of how trends are likely to develop in the future based on the facts provided, enabling these systems to create recommendations and personalized responses. A typical example here would be Netflix’s recommendation algorithm.

- Interpretation - these systems are used to interpret unstructured data in the form of text, images, audio and video. They allow processing large amounts of data from multiple resources. Examples here could be diagnostic software for analyzing X-rays and other medical-related media.

- Interaction with the physical environment - AI enables autonomous systems to navigate and manipulate the world around them. A good example here is autonomous vehicles that analyze large amounts of data from various sources: sensors, cameras, GPS systems and maps to safely and efficiently navigate routes to various locations.

- Interaction with people - this type of AI allows humans to interact with computers the same way they do with other people: using voice, gestures or facial expressions.

- Interaction with machines - this type of AI can automatically coordinate complex machine-to-machine interactions.
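As a rough illustration of the "Prediction" category above, the sketch below recommends a title to a user based on what similar users rated highly. It is a toy user-based collaborative filter with made-up names, titles and ratings; it is not how Netflix's recommendation algorithm actually works, only a minimal example of the general idea.

```python
import math

# Hypothetical ratings on a 1-5 scale (all names and titles are invented).
RATINGS = {
    "ana":   {"Drama A": 5, "Comedy B": 1, "Thriller C": 4},
    "ben":   {"Drama A": 4, "Comedy B": 2, "Thriller C": 5, "Drama D": 5},
    "chloe": {"Drama A": 1, "Comedy B": 5, "Comedy E": 4},
}


def cosine_similarity(a, b):
    """Similarity of two users' rating dictionaries (0 = nothing in common)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[title] * b[title] for title in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=1):
    """Score unseen titles by the ratings of other users,
    weighted by how similar each of those users is to `user`."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(ratings[user], other_ratings)
        for title, rating in other_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", RATINGS))  # ['Drama D']
```

Here "ana" gets "Drama D" because her tastes are much closer to "ben", who loved it, than to "chloe".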

Artificial Intelligence Use Cases

It’s hard to think of an industry today that has not been affected by AI in some way, and it is impossible to cover everything in this article. There have also been cases where a technology stops being considered AI once it has become commonplace. Therefore, in this section, we will discuss only some of the most interesting recent developments in AI.

Human Emotion Analysis

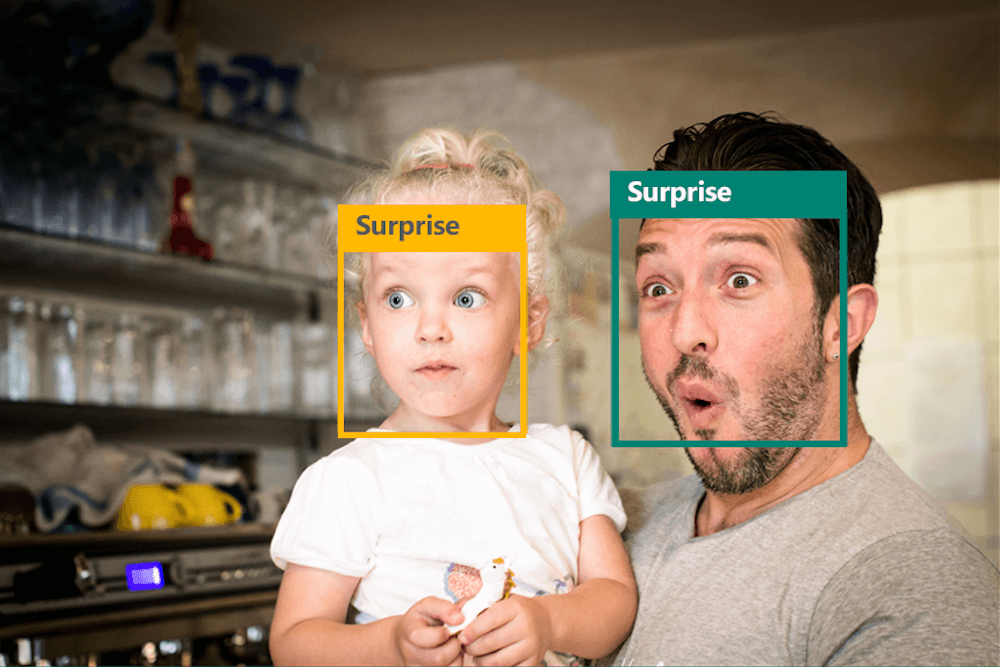

Advances in computer vision make it possible for machines to understand human emotions.

Affectiva is one of the leading companies that have successfully commercialized emotion recognition, and it is applying the technology to various vertical markets, including advertising, market research, media and entertainment.

Affectiva offers a cloud-based service that reads facial expressions, essentially offering “emotions as a service.” Affectiva has an emotion database of more than 4 million faces from people across 75 countries, which amounts to more than 40 billion data points. Its software is able to distinguish between a smile, surprise, dislike, attention, expressiveness, and other emotional signals. The underlying approach is the Facial Action Coding System (FACS), which is used to identify 21 facial expressions and map them to 6 emotions: disgust, fear, joy, surprise, anger, and sadness.

Hershey has used Affectiva to test a new in-store device called a Smile Sampler that prompts users to smile in return for a treat. Affectiva has also been used in Hollywood to test how audiences react to certain scenes in a movie. Another big area where Affectiva is being used is emotion-aware gaming. The bio-thriller game Nevermind, which uses Intel’s RealSense technology combined with Affectiva software, is able to change gameplay based on biofeedback, and emotion data gathered during playtesting helps enhance the game. Affectiva software is also being used in eSports, or live professional gaming, where emotion sensing is used to gauge audience engagement as well as the emotional state of players.

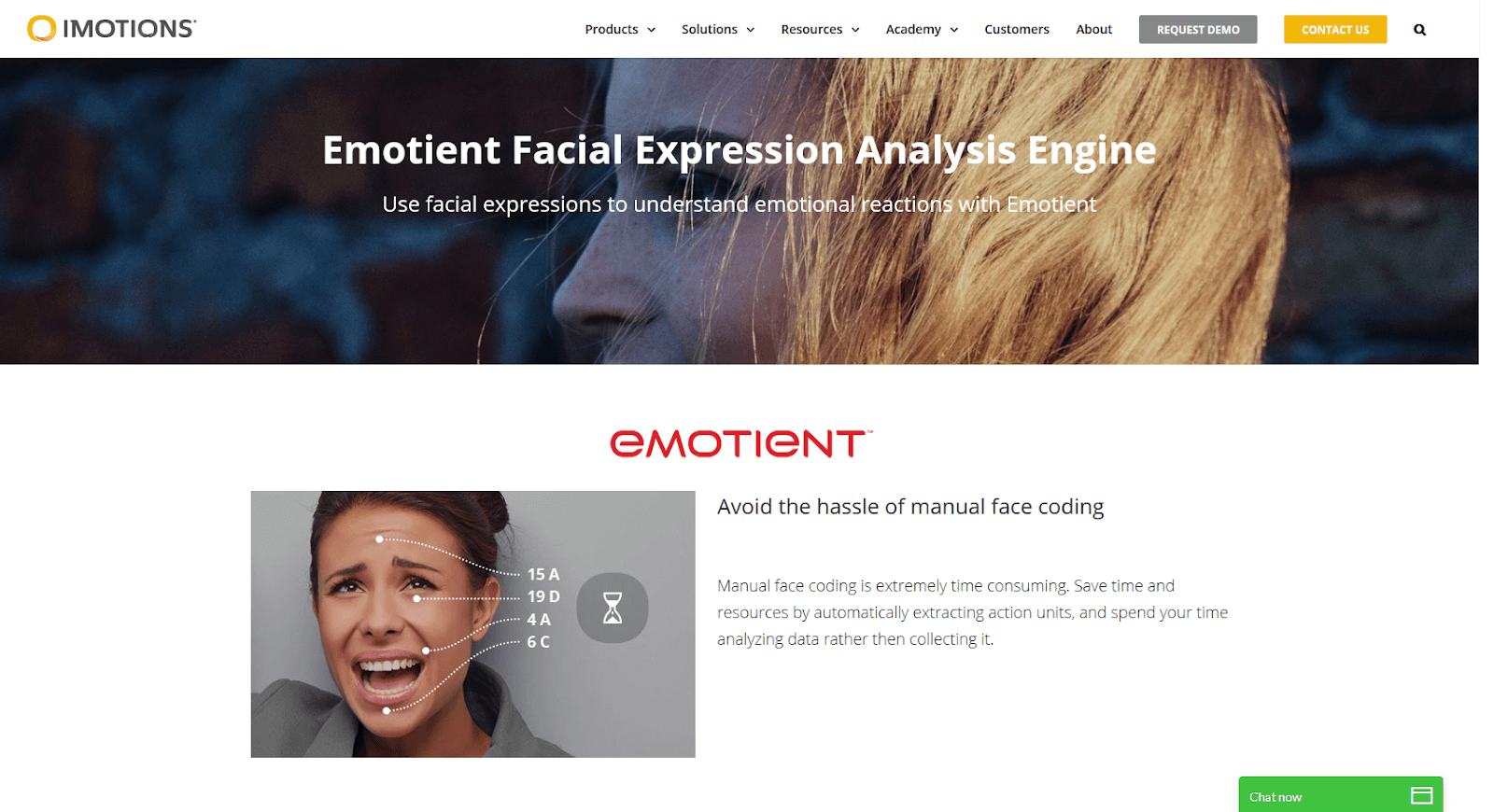

Apple recently purchased Emotient, another emotion recognition software company. The technology reportedly played a role in the new generation of iPhone and Mac software products, such as the animated emoticons and face recognition in iPhone X.

Wearable camera company Narrative has developed a game called Autimood, powered by Microsoft’s Project Oxford Emotion application programming interface (Emotion API), which uses machine learning to interpret emotions in facial expressions. The game lets parents of children with autism help their children improve their ability to recognize emotions. Autimood uses a wearable camera that takes pictures every 30 seconds, and at the end of the day, the Project Oxford Emotion API tags emotions in each picture so children can attempt to identify emotions and receive feedback.

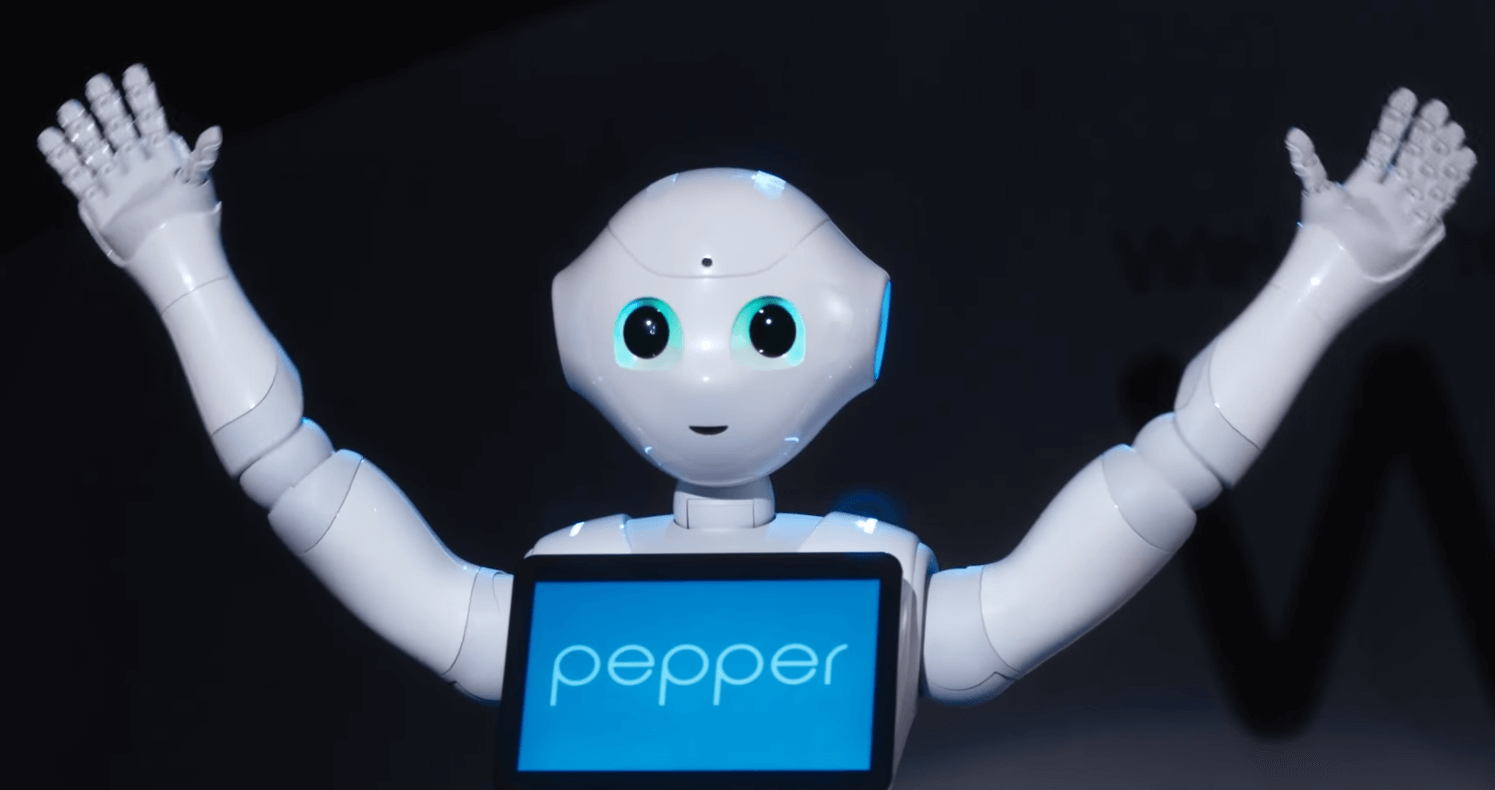

Japanese company Softbank Robotics has used AI to train its household robot Pepper to recognize emotional cues in human voices and facial expressions with computer vision and natural language processing. Pepper, which is four feet tall and moves around on wheels, can use these cues to influence the way it interacts with humans, for instance, when it answers questions or greets guests.

Powering Accessibility

Facebook has implemented computer-vision algorithms that automatically generate descriptive text captions for images and uses text-to-speech software to describe the photos to blind users. Though many people with visual impairments use screen-reading technology to browse the Internet, image-heavy websites such as Facebook are often difficult for screen readers to interpret, limiting accessibility. Facebook’s solution helps to mitigate this problem.

Weather Predictions For Businesses

IBM has created a machine-learning tool called Deep Thunder that analyzes historical weather and environmental data to provide targeted weather analysis, with a resolution as fine as 0.2 miles, so companies can factor weather into business-planning decisions. For example, a retailer can use Deep Thunder to estimate the impact weather will have on consumer purchasing decisions and preemptively adjust its stock of items accordingly.

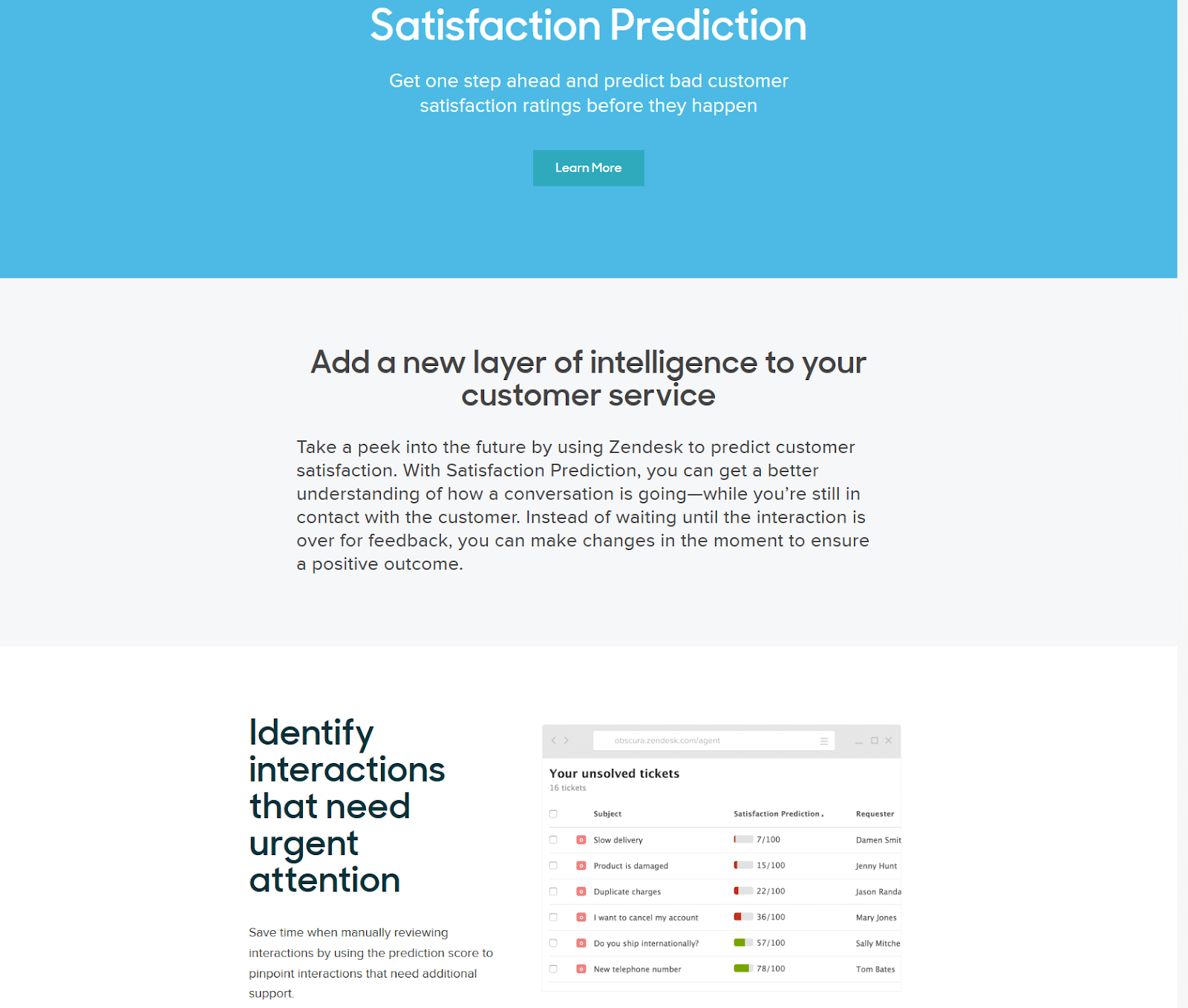

Customer Satisfaction

Zendesk has developed a tool called Satisfaction Prediction that uses machine learning to evaluate customer-service interactions and predict how satisfied a customer is, prompting an intervention if a customer is at risk of leaving a business. To estimate customer happiness, Satisfaction Prediction analyzes data such as customer-support ticket text, wait times, and the number of replies it takes to resolve a ticket, and it adjusts its predictive models in real time as it learns from more tickets and customer ratings.
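The idea of a model that keeps adjusting as new tickets arrive can be sketched with online logistic regression, where the weights are nudged after every rated ticket. This is only an illustrative stand-in: Zendesk's actual model is not described here, and the class name, the two features (wait time and reply count, both scaled to the range 0 to 1) and the sample tickets are all hypothetical.

```python
import math


class OnlineSatisfactionModel:
    """Toy online logistic regression: predicts the probability that a
    customer is satisfied and updates itself one rated ticket at a time."""

    def __init__(self, n_features, learning_rate=0.5):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features):
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, satisfied):
        # Gradient step on the log loss for a single example.
        error = self.predict(features) - (1.0 if satisfied else 0.0)
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error


# Hypothetical rated tickets: (scaled wait time, scaled reply count), satisfied?
fast_ticket = [0.1, 0.1]
slow_ticket = [0.9, 0.8]

model = OnlineSatisfactionModel(n_features=2)
for _ in range(200):
    model.update(fast_ticket, satisfied=True)
    model.update(slow_ticket, satisfied=False)

print(model.predict(fast_ticket), model.predict(slow_ticket))
```

After seeing enough rated tickets, the model assigns a lower satisfaction probability to the slow ticket than to the fast one, which is the kind of signal that could trigger an intervention.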

Assisting Emergency Services

NASA has developed a safety tool for firefighters called the Assistant for Understanding Data through Reasoning, Extraction, and sYnthesis (AUDREY), which uses AI to monitor data about firefighters’ environments to detect signs of danger and help them recover from disorientation to exit a building safely. AUDREY can monitor sensors in firefighting gear and warn firefighters, for example, if it is becoming dangerously hot or if dangerous gases are present, map the surrounding environment, and communicate with firefighters to guide them to safety if they need to evacuate. The more AUDREY is used in the field, the more it can learn about how firefighters operate and make more effective predictions.

Personalizing Education

IBM has developed a new tool called Teacher Advisor, based on its Watson cognitive computing platform, to help third-grade math teachers in the United States develop personalized lesson plans. Teacher Advisor analyzes Common Core education standards, which set targets for skills development, along with student data to help teachers tailor instructional material for students who are in the same class but have varying skill levels, a situation that can make traditional, static lesson plans ineffective. As of September 2017, elementary school teachers across the nation have been using Teacher Advisor With Watson, a free online tool, to access targeted lesson plans and videos for math instruction. Teacher Advisor can help educators ensure that all students, regardless of cultural background or family income, master the skills necessary to succeed in their future professions.

Intelligent Energy Use Monitoring

The Nest Learning Thermostat uses AI to learn homeowners’ preferences and schedules in order to optimize home heating and cooling, saving consumers an average of $131 to $145 in energy costs per year. The thermostat’s sensors can also monitor activity in a house and automatically reduce heating or cooling when nobody is home to avoid wasting energy.

Predicting Mental Illness

Researchers at Columbia University, the New York State Psychiatric Institute, and IBM have developed a machine-learning system that analyzes a person’s speech, which can exhibit telltale signs of the condition, to predict whether someone at risk of psychosis caused by schizophrenia will go on to develop it. In the researchers’ study, the system did so with 100 percent accuracy. By learning to identify the speech cues in audio recordings, the system could outperform normal diagnostic models, which are about 79 percent accurate.

Fighting Trolls Online

Jigsaw, a subsidiary of Google that develops tools to promote freedom of expression and combat extremism online, has developed an artificial intelligence tool called Conversation AI that is designed to detect and filter out abusive language in order to combat online harassment. Jigsaw trained Conversation AI on 17 million comments on New York Times articles and 13,000 discussions on Wikipedia pages to identify abusive language with 92 percent accuracy. Conversation AI is available as an open source project so that web developers can implement it on their sites as a filter that blocks abusive language in comments.
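A comment filter of this kind usually boils down to two steps: score each comment, then block anything above a threshold. The sketch below shows that pattern only. The `toxicity_score` function is a crude keyword counter standing in for a trained model, and every name, term and threshold in it is a hypothetical choice for illustration; none of it is Conversation AI's actual interface.

```python
def toxicity_score(comment, abusive_terms):
    """Stand-in for a trained model: the share of words in the comment
    that appear in a list of abusive terms (0.0 = clean, 1.0 = all abusive)."""
    words = [word.strip(".,!?").lower() for word in comment.split()]
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in abusive_terms)
    return hits / len(words)


def filter_comments(comments, abusive_terms, threshold=0.2):
    """Keep only the comments that score below the blocking threshold."""
    return [
        comment
        for comment in comments
        if toxicity_score(comment, abusive_terms) < threshold
    ]


# Hypothetical data for illustration.
ABUSIVE_TERMS = {"idiot"}
COMMENTS = ["Great article, thanks!", "You idiot!"]

print(filter_comments(COMMENTS, ABUSIVE_TERMS))  # ['Great article, thanks!']
```

In a real deployment the scoring function would be replaced by a model's prediction, and the threshold would be tuned to balance blocked abuse against wrongly blocked comments.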

Uber’s Self-Driving Taxis

Uber has been testing its fleet of self-driving taxis for a while now. The taxis, modified Volvo SUVs, have a human in the driver’s seat to take over driving if necessary but can drive around the city to pick up and drop off passengers almost entirely autonomously. Although the technology is still far from ideal (there have already been incidents), over time it could evolve to a point where we trust autonomous vehicles to get us to our destinations much more than we trust human drivers.

Leading Tech Companies Driving AI Forward

Artificial intelligence is the technology that makes the world go round these days, and it is not surprising that such tech giants as IBM, Intel, Google and others use it to implement innovative ideas and contribute to its development. Each of them has its own approach to AI development and delivers solutions in its specific domain: IBM provides a complex AI environment tailored to various business needs, Intel produces purpose-built neural processing units to power AI solutions and startups, and Google specializes in personalizing and enhancing web search for its users. Other companies that have invested in AI include NVIDIA, Spotify, Microsoft, Uber, Facebook, Apple and Salesforce.

AI Statistics and Recent Trends

The International Data Corporation (IDC) estimates that in the United States the market for AI technologies that analyze unstructured data will reach $40 billion by 2020, and will generate more than $60 billion worth of productivity improvements for businesses in the United States per year.

Investors in the United States are increasingly recognizing the potential value of AI, investing $757 million in venture capital in AI startups in 2013, $2.18 billion in 2014, $2.39 billion in 2015, and $4 billion to $5 billion in 2016. The McKinsey Global Institute estimates that by 2025 automating knowledge work with AI will generate between $5.2 trillion and $6.77 trillion, advanced robotics relying on AI will generate between $1.7 trillion and $4.5 trillion, and autonomous and semi-autonomous vehicles will generate between $0.2 trillion and $1.9 trillion.
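To put the venture capital figures above in perspective, the short calculation below works out the year-over-year growth and the compound annual growth rate for 2013 to 2015. It uses only the numbers quoted in the text; 2016 is left out because only a range is given for that year, and the function names are our own.

```python
# Venture capital invested in US AI startups, in millions of dollars,
# as quoted in the text. 2016 is omitted because only a range is given.
INVESTMENT_MUSD = {2013: 757, 2014: 2180, 2015: 2390}


def year_over_year_growth(series):
    """Fractional growth of each year relative to the previous one."""
    years = sorted(series)
    return {
        year: series[year] / series[previous] - 1
        for previous, year in zip(years, years[1:])
    }


def compound_annual_growth_rate(series):
    """Constant yearly growth rate that links the first and last values."""
    years = sorted(series)
    first, last = years[0], years[-1]
    return (series[last] / series[first]) ** (1 / (last - first)) - 1


growth = year_over_year_growth(INVESTMENT_MUSD)
cagr = compound_annual_growth_rate(INVESTMENT_MUSD)

for year, rate in growth.items():
    print(f"{year}: {rate:.1%} growth over the previous year")
print(f"2013-2015 compound annual growth: {cagr:.1%}")
```

With the quoted figures, investment grew by roughly 188% in 2014 and just under 10% in 2015, which averages out to about 78% per year on a compound basis.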

According to a survey conducted by PwC in February 2017, despite the way the film industry and news media largely portray AI, most consumers see how it could benefit their lives. More than half agree that AI will help solve complex problems that plague modern societies (63%) and help people live more fulfilling lives (59%). On the other hand, less than half believe AI will harm people by taking away jobs (46%). When it comes to a blockbuster-movie-style doomsday, only 23% believe AI will have serious, negative implications.

Another interesting finding from the survey is that respondents think that by 2025 AI will most likely be able to:

- Write a top 100 Billboard song

- Create a piece of art that is worth over $100,000

- Write a hit TV series

The respondents were less optimistic, though not entirely dismissive, about the likelihood that AI will:

- Author a New York Times bestseller

- Write a movie that wins an Oscar

- Win a Pulitzer prize for journalism

The survey found that 72% of the business executives interviewed believe AI will be the business advantage of the future. More than half believe that using AI in business settings could boost productivity, inform business strategy, and generate growth that far outweighs the potential downside of employment concerns.

Conclusion

Artificial intelligence has become a technology that is strongly woven into our everyday lives. Whether we seek help from services such as Google Assistant, Apple’s Siri or Microsoft’s Cortana; watch our favorite movies, listen to music and receive recommendations from Netflix or Spotify; play logic games against a computer opponent; interact with our smart home devices; add amusing filters to our photos and videos; or interact with chatbots online, we are surrounded by AI technology. And that’s just from a consumer’s point of view. Businesses use this technology to work with vast amounts of data, analyzing and interpreting it so people can use it more efficiently. Research suggests that this trend shows no signs of stopping in the near future.

Skynet, here we come!